The concept of latent space plays a crucial role in the vast world of data science and artificial intelligence. Often described as a hidden dimension where data representations reside, latent space is essential in various applications, including machine learning, natural language processing, and image generation. This article will explore latent space, how it works, and its significance in modern technology.

What is Latent Space?

Latent space refers to a compressed representation of data that captures the essential features of the input while discarding less important details. Think of it as a condensed version of a book: you might lose some nuances, but the main ideas remain intact. This compressed representation allows algorithms to process and understand complex data more efficiently.

Characteristics of Latent Space

- Dimensionality Reduction: Latent space typically has fewer dimensions than the original data, making it easier to work with.

- Feature Extraction: It emphasizes the most critical features, allowing for better generalization in machine learning models.

- Continuous Representation: Data points in latent space are often represented continuously, facilitating smooth transitions and interpolations between data points.
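The sketch below makes dimensionality reduction concrete with principal component analysis (PCA). PCA is a linear technique chosen here for illustration; it is not one of the neural approaches discussed later. The sketch assumes NumPy and a synthetic dataset of 3-D points that mostly lie on a 2-D plane. The helper names `pca_latent` and `reconstruct` are hypothetical.

```python
import numpy as np

def pca_latent(X, k):
    """Project the rows of X onto the top-k principal components.

    Returns (Z, components, mean), where Z holds the k-dimensional latent codes.
    """
    mean = X.mean(axis=0)
    Xc = X - mean
    # Rows of Vt are principal directions, ordered by decreasing singular value.
    _, _, Vt = np.linalg.svd(Xc, full_matrices=False)
    components = Vt[:k]
    Z = Xc @ components.T  # encode: 3-D points -> k-D latent codes
    return Z, components, mean

def reconstruct(Z, components, mean):
    """Decode: map latent codes back into the original space."""
    return Z @ components + mean

rng = np.random.default_rng(0)
# Synthetic 3-D data generated from 2 hidden factors, plus a little noise.
latent_true = rng.normal(size=(200, 2))
mixing = np.array([[1.0, 0.5, 0.2], [0.3, 1.0, -0.4]])
X = latent_true @ mixing + 0.01 * rng.normal(size=(200, 3))

Z, comps, mu = pca_latent(X, k=2)
X_hat = reconstruct(Z, comps, mu)
print(Z.shape)                   # (200, 2)
print(np.abs(X - X_hat).max())   # should be small: mostly noise is discarded
```

The third principal direction carries only noise, so the 2-D codes retain almost all of the information. This is the sense in which a latent space keeps the essential features and drops the details.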

How Does Latent Space Work?

Understanding how latent space functions requires delving into several key concepts:

- Representation Learning

At the heart of latent space is representation learning, a type of machine learning that focuses on finding the best way to represent data. By learning efficient representations, algorithms can better capture the underlying patterns and structures within the data.

- Neural Networks

Deep learning models often use neural networks to create and navigate latent space. These networks consist of layers of interconnected nodes that learn to transform input data into a lower-dimensional representation.

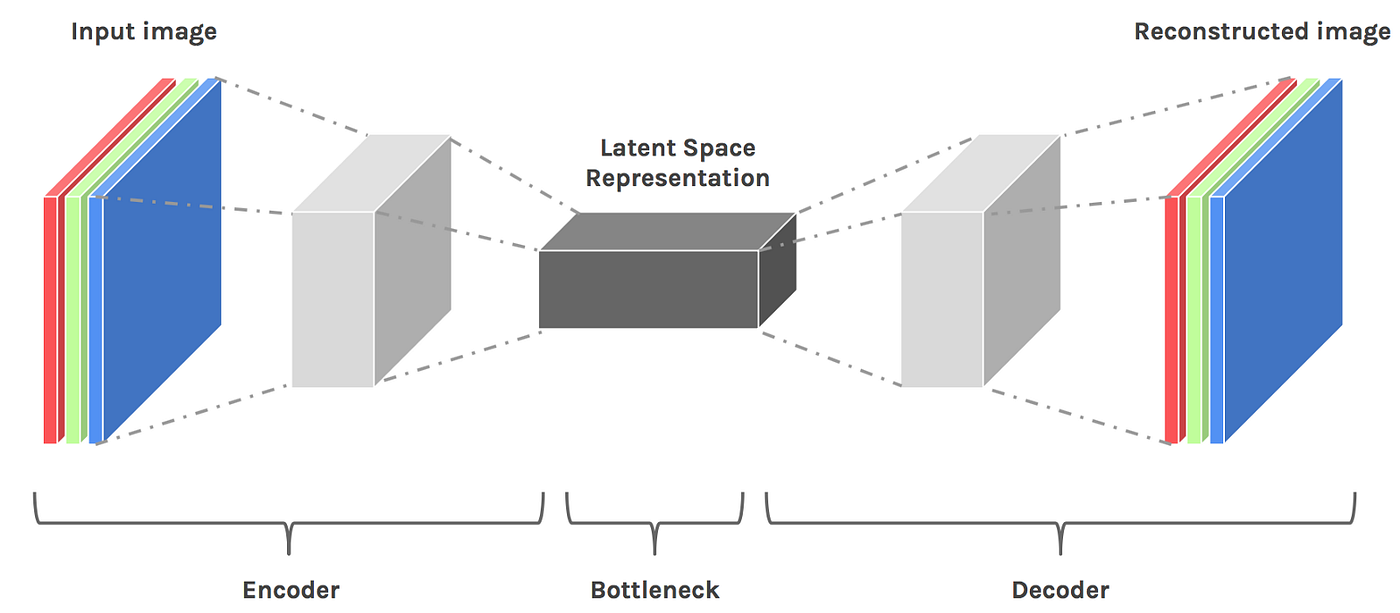

- Encoder-Decoder Architecture: The encoder-decoder architecture is a popular way to create a latent space. The encoder compresses the input into a latent representation, and the decoder reconstructs the original input from that representation.

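- Variational Autoencoders (VAEs): VAEs are encoder-decoder networks whose encoder outputs a probability distribution over latent space rather than a single point. Training keeps this latent space close to a simple prior distribution. As a result, new data points can be generated by sampling from the latent space and decoding the samples, which makes VAEs a powerful way to explore and manipulate data.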
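The sampling step that makes VAEs generative can be sketched with the commonly used reparameterization trick and the closed-form KL divergence to a standard normal prior. This is a NumPy sketch, not a full VAE. The encoder outputs `mu` and `log_var` below are made-up values, and the decoder is omitted.

```python
import numpy as np

def sample_latent(mu, log_var, rng):
    """Reparameterization trick: z = mu + sigma * eps, with eps ~ N(0, I)."""
    sigma = np.exp(0.5 * log_var)
    eps = rng.standard_normal(mu.shape)
    return mu + sigma * eps

def kl_to_standard_normal(mu, log_var):
    """KL(N(mu, sigma^2) || N(0, I)), summed over latent dimensions."""
    return -0.5 * np.sum(1 + log_var - mu**2 - np.exp(log_var), axis=-1)

rng = np.random.default_rng(42)
# Hypothetical encoder outputs for a batch of 4 inputs with a 2-D latent space.
mu = np.array([[0.0, 0.0], [1.0, -1.0], [0.5, 0.2], [-2.0, 0.0]])
log_var = np.zeros_like(mu)  # sigma = 1 in every dimension

z = sample_latent(mu, log_var, rng)
print(z.shape)                             # (4, 2)
print(kl_to_standard_normal(mu, log_var))  # first row is zero: it already matches the prior
```

Writing the sample as `mu + sigma * eps` keeps the randomness in `eps`, so gradients can flow back into the encoder's outputs during training. To generate new data, one would draw `z` from N(0, I) and pass it through the trained decoder.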

- Training a Latent Space Model

Training a model to learn a latent space involves the following steps:

- Data Preparation: The first step is to collect and preprocess the data. This may involve normalizing values, handling missing data, and ensuring the dataset is representative.

- Model Selection: Choosing an appropriate neural network architecture is crucial. This decision depends on the type of data and the intended application.

- Training: During training, the model learns to map input data to latent space and back. For an autoencoder, this typically means minimizing a reconstruction loss, with parameters optimized by backpropagation and gradient descent.
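The three steps above can be sketched end to end with a tiny linear autoencoder trained by hand-written backpropagation and full-batch gradient descent. Everything in it is an assumption made for illustration: the synthetic data, the 4-to-2 linear architecture, the learning rate, and the step count. Practical models use nonlinear layers and a framework such as PyTorch or JAX.

```python
import numpy as np

rng = np.random.default_rng(0)

# 1. Data preparation: synthetic 4-D data driven by 2 hidden factors, then standardized.
factors = rng.normal(size=(500, 2))
X = factors @ rng.normal(size=(2, 4)) + 0.05 * rng.normal(size=(500, 4))
X = (X - X.mean(axis=0)) / X.std(axis=0)

# 2. Model selection: a linear encoder (4 -> 2) and a linear decoder (2 -> 4).
W_enc = 0.1 * rng.normal(size=(4, 2))
W_dec = 0.1 * rng.normal(size=(2, 4))

def reconstruction_loss(X, W_enc, W_dec):
    """Mean squared error between the input and its reconstruction."""
    return np.mean((X @ W_enc @ W_dec - X) ** 2)

# 3. Training: full-batch gradient descent with gradients derived by hand (backpropagation).
lr = 0.1
initial_loss = reconstruction_loss(X, W_enc, W_dec)
for step in range(3000):
    Z = X @ W_enc                          # encode into the 2-D latent space
    X_hat = Z @ W_dec                      # decode back to 4-D
    grad_out = 2.0 * (X_hat - X) / X.size  # dLoss/dX_hat
    grad_dec = Z.T @ grad_out              # dLoss/dW_dec
    grad_enc = X.T @ (grad_out @ W_dec.T)  # dLoss/dW_enc, propagated through the decoder
    W_dec -= lr * grad_dec
    W_enc -= lr * grad_enc
final_loss = reconstruction_loss(X, W_enc, W_dec)
print(f"loss: {initial_loss:.3f} -> {final_loss:.4f}")
```

A linear autoencoder trained with squared error learns the same subspace as PCA. Adding nonlinear layers is what allows deep models to capture curved structure in the data.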

- Visualization of Latent Space

Visualizing latent space can provide valuable insights into how data is represented. Techniques like t-SNE (t-distributed Stochastic Neighbor Embedding) and PCA (Principal Component Analysis) can project high-dimensional data into two or three dimensions for easier interpretation.

- Clustering: In a well-trained model, data points that are similar in the original space tend to lie close together in latent space, so natural clusters emerge.

- Interpolation: Because latent space is continuous, moving smoothly between two points in it can produce new, plausible examples.
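Interpolation amounts to walking along a path between two latent codes. The minimal sketch below assumes straight-line (linear) interpolation and uses made-up 3-D codes. Some models use spherical interpolation instead, and the decoder that turns each point back into an example is not shown.

```python
import numpy as np

def interpolate(z_a, z_b, num_steps):
    """Return num_steps latent vectors evenly spaced on the line from z_a to z_b."""
    alphas = np.linspace(0.0, 1.0, num_steps)[:, None]  # column of weights in [0, 1]
    return (1.0 - alphas) * z_a + alphas * z_b

z_a = np.array([0.0, 1.0, -1.0])
z_b = np.array([2.0, -1.0, 1.0])
path = interpolate(z_a, z_b, num_steps=5)
print(path)  # the middle row is [1, 0, 0]
# Each row would then be passed through a trained decoder (not shown) to produce an intermediate example.
```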

Applications of Latent Space

Latent space is not just an abstract concept; it has real-world applications across various fields. Here are some of the most notable uses:

- Image Generation

In computer vision, latent space is essential for generating new images. Techniques like Generative Adversarial Networks (GANs) use latent space to create realistic pictures from random noise.

- Deepfakes: Latent space enables the generation of realistic deepfake videos by manipulating facial features and expressions.

- Style Transfer: By altering the latent representations of images, artists can apply different styles to photographs, transforming them into unique works of art.
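One common way to manipulate attributes such as facial expressions is latent vector arithmetic: estimate a direction associated with an attribute, then move a code along that direction. The sketch below assumes that latent codes for examples with and without the attribute are already available. Here those codes are synthetic, and the names `attribute_direction` and `edit_latent` are hypothetical.

```python
import numpy as np

def attribute_direction(codes_with, codes_without):
    """Estimate an attribute direction as the difference of mean latent codes."""
    return codes_with.mean(axis=0) - codes_without.mean(axis=0)

def edit_latent(z, direction, strength):
    """Move a latent code along an attribute direction."""
    return z + strength * direction

rng = np.random.default_rng(1)
# Hypothetical 8-D latent codes, e.g. from a trained GAN or encoder (not shown).
true_dir = np.zeros(8)
true_dir[0] = 3.0  # pretend the attribute lives mostly in dimension 0
codes_without = rng.normal(size=(100, 8))
codes_with = rng.normal(size=(100, 8)) + true_dir

d = attribute_direction(codes_with, codes_without)
z = rng.normal(size=8)
z_edited = edit_latent(z, d, strength=1.0)
print(np.round(d, 1))  # dimension 0 should dominate
```

Decoding `z_edited` with the generator would, under these assumptions, yield the original example with the attribute strengthened. A negative `strength` would weaken it.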

- Natural Language Processing

Latent space also plays a significant role in natural language processing (NLP). Techniques like word embeddings represent words as vectors in latent space, capturing semantic relationships.

- Word2Vec: This popular model maps words to vectors in a latent space, where word similarities and analogies can be computed with simple vector arithmetic.

- Sentence Embeddings: Sentences can also be represented in latent space, facilitating tasks like sentiment analysis and text classification.
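The analogy computation mentioned for Word2Vec can be illustrated with cosine similarity. The 4-D vectors below are hand-made toy values, with dimensions that loosely mean royalty, male, female, and fruit. They are not real Word2Vec output, and `analogy` is a hypothetical helper.

```python
import numpy as np

# Hand-made toy embeddings; dimensions loosely mean [royalty, male, female, fruit].
# Real Word2Vec vectors are learned from a corpus and usually have hundreds of dimensions.
embeddings = {
    "king":  np.array([0.9, 0.8, 0.1, 0.0]),
    "queen": np.array([0.9, 0.1, 0.8, 0.0]),
    "man":   np.array([0.1, 0.9, 0.1, 0.0]),
    "woman": np.array([0.1, 0.1, 0.9, 0.0]),
    "apple": np.array([0.0, 0.1, 0.1, 0.9]),
}

def cosine(u, v):
    """Cosine similarity: 1.0 means the vectors point in the same direction."""
    return float(u @ v / (np.linalg.norm(u) * np.linalg.norm(v)))

def analogy(a, b, c):
    """Return the word whose vector is closest to a - b + c, excluding the inputs."""
    target = embeddings[a] - embeddings[b] + embeddings[c]
    candidates = [w for w in embeddings if w not in (a, b, c)]
    return max(candidates, key=lambda w: cosine(embeddings[w], target))

print(analogy("king", "man", "woman"))  # queen
```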

- Recommender Systems

Recommender systems, such as those used by Netflix or Amazon, leverage latent space to predict user preferences. By analyzing user behavior and item characteristics, these systems can place users and items in the same latent space, making it easier to recommend relevant content.
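Placing users and items in a shared latent space is often done with matrix factorization. Each user and each item gets a k-dimensional vector, and a predicted rating is the dot product of the two. The sketch below fits such vectors to a small made-up ratings matrix with gradient descent. The data, `k`, the learning rate, and the regularization strength are illustrative assumptions, and the sketch does not describe any particular company's system.

```python
import numpy as np

# Toy ratings matrix (users x items); 0 marks an unobserved rating.
R = np.array([
    [5, 3, 0, 1],
    [4, 0, 0, 1],
    [1, 1, 0, 5],
    [0, 1, 5, 4],
], dtype=float)
observed = R > 0

k = 2             # latent dimensions shared by users and items
lr, reg = 0.01, 0.02
rng = np.random.default_rng(0)
U = 0.1 * rng.normal(size=(R.shape[0], k))  # one latent vector per user
V = 0.1 * rng.normal(size=(R.shape[1], k))  # one latent vector per item

def masked_mse(U, V):
    """Mean squared error over the observed ratings only."""
    err = (U @ V.T - R) * observed
    return np.sum(err ** 2) / observed.sum()

initial = masked_mse(U, V)
for _ in range(5000):
    err = (U @ V.T - R) * observed  # residuals on observed entries only
    # Gradients of 0.5 * squared error plus an L2 penalty on the latent vectors.
    grad_U = err @ V + reg * U
    grad_V = err.T @ U + reg * V
    U -= lr * grad_U
    V -= lr * grad_V
final = masked_mse(U, V)

pred = U @ V.T  # predicted rating for every user-item pair, including unobserved ones
print(round(initial, 2), round(final, 2))
print(np.round(pred, 1))
```

The entries of `pred` at unobserved positions are the model's recommendations. Items with high predicted ratings would be candidates to show that user.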

- Drug Discovery

In the pharmaceutical industry, latent space is used to explore potential drug candidates. Researchers can identify promising molecules for further testing by representing chemical compounds in latent space.

- Virtual Screening: Latent space can facilitate the virtual screening of vast chemical libraries, speeding up drug discovery.

- Drug Design: Researchers can design new compounds with desired properties by navigating latent space.
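Navigating latent space toward desired properties is often framed as an optimization problem: score latent codes with a property predictor, then follow its gradient. In the sketch below, the predictor is a hypothetical quadratic stand-in with a known optimum `TARGET`. A real workflow would use a predictor learned from data and would decode the result into a molecule.

```python
import numpy as np

# Hypothetical stand-in for a trained property predictor (higher score is better).
TARGET = np.array([1.5, -0.5, 2.0])

def predicted_property(z):
    return -np.sum((z - TARGET) ** 2)

def property_gradient(z):
    return -2.0 * (z - TARGET)

def optimize_latent(z0, lr=0.1, steps=100):
    """Gradient ascent on the predicted property, starting from a known compound's code."""
    z = z0.copy()
    for _ in range(steps):
        z += lr * property_gradient(z)
    return z

z_start = np.zeros(3)  # e.g. the latent code of an existing molecule
z_best = optimize_latent(z_start)
print(predicted_property(z_start), predicted_property(z_best))
# z_best would then be decoded into a candidate molecule by the model's decoder (not shown).
```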

The Importance of Latent Space in Machine Learning

Latent space is a cornerstone of modern machine learning techniques. Its importance can be summarized in three key points:

- Efficiency: By reducing the dimensionality of data, latent space allows algorithms to operate more efficiently, saving time and computational resources.

- Improved Performance: Models that leverage latent space often achieve better performance as they focus on the most relevant features of the data.

- Flexibility: The continuous representation of data in latent space enables smooth transitions and transformations, making it adaptable to various applications.

Challenges and Limitations of Latent Space

Despite its many benefits, working with latent space presents some challenges:

- Interpretability

Understanding what each dimension of latent space represents can be difficult. This lack of interpretability can make it harder to justify decisions based on model outputs.
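A common way to probe what a single dimension encodes is a latent traversal: hold a code fixed, sweep one dimension, and decode each variant. The minimal sketch below uses a made-up 4-D code. The decoder is not shown, and `latent_traversal` is a hypothetical helper.

```python
import numpy as np

def latent_traversal(z, dim, values):
    """Return copies of z in which only dimension `dim` is swept over `values`."""
    grid = np.tile(z, (len(values), 1))  # one row per value
    grid[:, dim] = values
    return grid

z = np.array([0.2, -0.7, 1.1, 0.0])
grid = latent_traversal(z, dim=2, values=np.linspace(-3, 3, 7))
print(grid.shape)  # (7, 4)
# Decoding each row (decoder not shown) and comparing the outputs hints at what dimension 2 encodes.
```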

- Overfitting

When a model fits its training data too closely, it risks overfitting. The result is a model that performs well on known data but poorly on unseen examples.

- Data Quality

The effectiveness of latent space depends heavily on the quality of the input data. Poorly curated datasets can lead to inaccurate representations, which degrade the model’s overall performance.

Future Directions of Latent Space Research

As technology continues to evolve, so will the understanding and application of latent space. Here are some potential future directions:

- Enhanced Visualization Techniques

Researchers are likely to develop more advanced visualization techniques that allow for better interpretation of latent space, making it easier to understand complex data representations.

- Integration with Other Technologies

Latent space could be integrated with other technologies, such as augmented reality and virtual reality, to create immersive experiences that leverage rich data representations.

- Cross-Disciplinary Applications

As the importance of data-driven decision-making grows, latent space could find applications in a broader range of fields, from social sciences to environmental studies.

Conclusion

In summary, latent space is a powerful concept that underpins many modern machine learning and artificial intelligence applications. By providing a compressed representation of data, it enables efficient processing, better generalization, and improved performance across many fields. As technology advances, further exploration of latent space is likely to lead to exciting discoveries and innovations.

Whether you’re a seasoned data scientist or just starting your journey, understanding latent space is critical to unlocking the potential of data in today’s world. So, embrace the hidden dimensions of data and explore the possibilities latent space offers.